Feminism, at its core, is about the transformation of power—but how do you know that’s happening at the organizational level? How can you understand the core drivers of that transformation? How can your own process of evaluating that transformation democratize the evaluators’ power?

Taylor Witkowski and Amy Gray are evaluation and learning specialists at Oxfam America, designing and testing feminist monitoring, evaluation and learning processes for a gender justice-focused organizational change initiative.

WHAT IS FEMINIST EVALUATION?

Everything is political – even evaluations.

Traditional evaluations, even when using participatory methods, prioritize certain voices and experiences based on gender, race, class, etc., which distorts perceptions of realities. Evaluators themselves carry significant power and privilege, including through their role in design and implementation, often deciding which questions to ask, which methodologies to use, and who to consult.

Feminist evaluation recognizes that knowledge is dependent upon cultural and social dynamics, and that some forms of knowledge are privileged over others, reflecting the systemic and structural nature of inequality. However, there are multiple ways of knowing that must be recognized, made visible and given voice.

In feminist evaluation, knowledge has power and should therefore belong to those who create, hold and share it. The evaluator should ensure that evaluation processes and findings attempt to bring about change, and that power (knowledge) is held by the people, project or program being evaluated.

In other words, evaluation is a political activity and the evaluator is an activist.

APPLYING FEMINIST EVALUATION TO ORGANIZATIONAL TRANSFORMATION AT OXFAM AMERICA

Oxfam America is seeking to understand what it means to be a gender just organization—from the inside out.

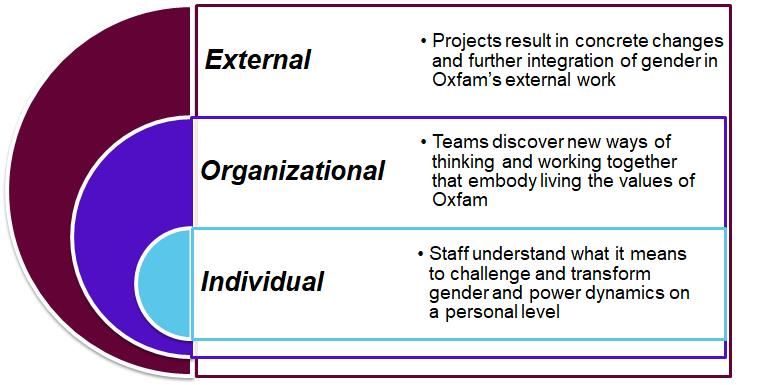

To do this, Oxfam America recognizes that a holistic, multi-level approach is required. We believe that transformational change begins at the individual level and ripples outward into the organizational culture and external-facing work (Figure 1).

(Figure 1)

This is why we are investing in a feminist approach to monitoring and evaluation. Feminist values (adaptivity, intersectionality, power-sharing, reflexivity, transparency) seem like good practice, but without mainstreaming and operationalization they would not be fully understood or tied to accountability mechanisms at the individual, organizational or external levels.

Therefore, as evaluators, we are holding ourselves accountable to critically exploring and implementing these values in our piece of this process. The foundational elements of this emergent approach include:

- Power-Sharing: The design, implementation and refinement of the monitoring and evaluation framework and tools are democratized through participatory consultations with a range of stakeholders—a steering committee, the senior leadership team, board members, gender team and evaluation team.

- Co-Creation: Monitoring includes self-reported data from project contributors as well as process documentation from both consultants and evaluation staff, and data is continually fed into peer validation loops for ongoing reflection and refinement.

- Transparency: Information regarding the monitoring and evaluation framework, approach and activities is communicated and made accessible to staff on a rolling basis as it evolves.

- Peer Accountability: Monitoring mechanisms that capture failures, together with peer-to-peer safe spaces in which to discuss them, create new opportunities for horizontal learning and growth. This includes a social network analysis (SNA) of perceived power dynamics within teams (based on team members' responses to an anonymous survey), followed by a group discussion in which team members reflected on the visual depiction of their working dynamics through the lens of hierarchy and intersectionality.
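For readers unfamiliar with SNA, here is a minimal sketch of one common measure, in-degree centrality, computed from survey nominations. It is an illustration only, not a description of the analysis used in this initiative: the survey question, the role labels and every tie in `nominations` are hypothetical, invented so the example can run.

```python
from collections import Counter

# Hypothetical, anonymized survey responses (invented for illustration).
# Each pair (respondent, nominee) means the respondent named the nominee
# as someone who shapes the team's decisions.
nominations = [
    ("member_a", "lead"),
    ("member_b", "lead"),
    ("member_c", "lead"),
    ("member_a", "member_b"),
    ("lead", "member_b"),
    ("member_c", "member_a"),
]


def in_degree_centrality(edges):
    """Return each person's share of the nominations they could have received."""
    people = sorted({person for edge in edges for person in edge})
    # Deduplicate so a repeated nomination from the same respondent counts once.
    received = Counter(nominee for _, nominee in set(edges))
    max_possible = len(people) - 1  # everyone except oneself
    if max_possible == 0:
        return {person: 0.0 for person in people}
    return {person: received[person] / max_possible for person in people}


centrality = in_degree_centrality(nominations)
for person, score in sorted(centrality.items(), key=lambda item: -item[1]):
    print(f"{person}: {score:.2f}")
```

A score near 1 means nearly everyone on the team named that person; a score of 0 means no one did. A number like this is only a starting point: in the approach described above, the interpretation comes from the group discussion, where team members read the picture through the lens of hierarchy and intersectionality.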

EVALUATORS AS ACTIVISTS

As the monitoring, evaluation and learning (MEL) staff working on this initiative, we recognize that we have an opportunity to directly contribute to change. We therefore see ourselves as activists, ensuring MEL processes and tools share knowledge and power as well as generate evidence that reflects diverse realities and perspectives, and can be used for accountability and learning at multiple levels. As a result of this feminist approach to MEL, participating Oxfam staff can see and influence organizational transformation.

How have you used feminist evaluation in your work? Do you have any tips, resources or lessons learned you’d like to share? Do you think this would make a good roundtable discussion?
